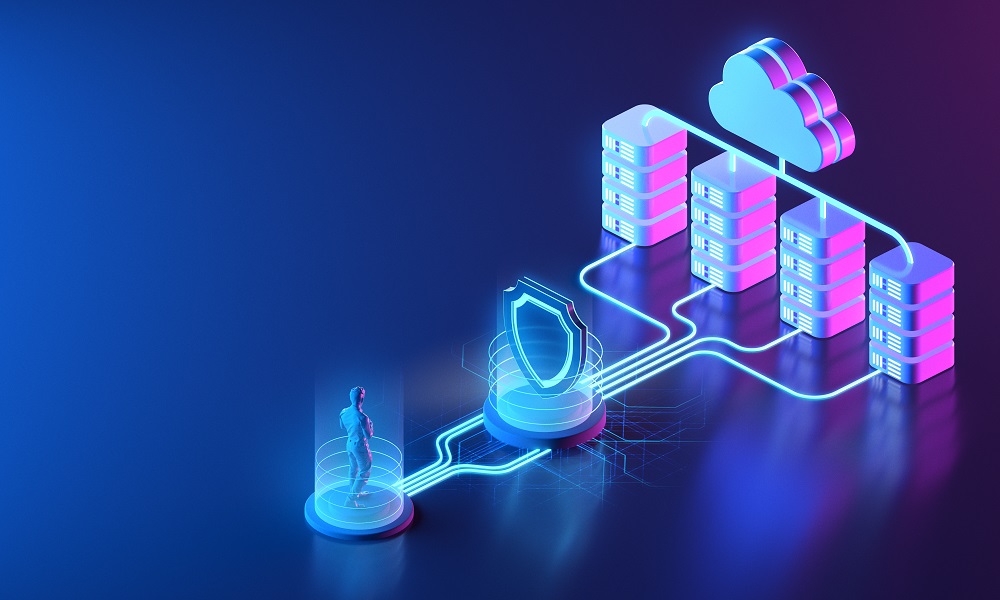

The world has gone digital: almost every need can now be met with a few taps on a mobile phone or another screen. The digital world has made room for many new businesses, but it has also made room for cyber theft and hackers. This rapidly growing digital world is undoubtedly helping businesses grow, but it is also putting them at a higher risk of cyber-attacks, because most of these businesses depend on crucial, confidential data. Big data can help protect these businesses from such attacks and thefts.

Cyber security threats:

The traditional idea of corporate security has all but disappeared from the business world, largely because of the growing interconnectivity of digital devices over the internet. Data protection has always been a concern for many businesses, and it has long been handled with capable tools. In today's high-tech world, however, traditional data security tools are no longer enough to protect against threats arriving from many different directions. What is needed now is a smart tool that can detect malicious activity on the network at a very early stage and stop the threat before it penetrates the system.

Why is big data analytics a perfect fit for cyber security?

Cyber security demands thorough checks on stored data and a keen eye for detecting threats. This is where big data comes into play. Data security requires complex data analysis, real-time monitoring, correlation of the various data available, and other activities. These tasks call for advanced analytics and large-scale data processing, which big data analytics and management can handle well. Big data security analytics is one of the most potent tools for data security and can meet the challenges posed by the latest digital platforms and advancements.
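As a rough illustration of what "correlation of various data" and a "real-time check" can mean in practice, here is a minimal Python sketch. It assumes a hypothetical event format (the `Event` class with `timestamp`, `source`, `ip` and `kind` fields) and illustrative thresholds; none of these names or numbers come from a particular product or standard. The function flags an IP address when its failed logins within a sliding time window reach a threshold and are spread across more than one log source. A real big data pipeline would run similar logic on a distributed streaming engine over far larger volumes.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Event:
    # Hypothetical event format, assumed for illustration only.
    timestamp: float  # seconds since epoch
    source: str       # log the event came from, e.g. "vpn" or "web"
    ip: str           # client IP address
    kind: str         # e.g. "login_failed" or "login_ok"


def correlate_failed_logins(events, window_seconds=60.0, threshold=5, min_sources=2):
    """Return the set of IPs whose failed logins inside a sliding window
    reach `threshold` and come from at least `min_sources` distinct logs.
    The default values are illustrative assumptions, not recommendations."""
    recent = defaultdict(deque)  # ip -> deque of (timestamp, source) for failures
    flagged = set()
    # Process events in time order, as a streaming system would.
    for ev in sorted(events, key=lambda e: e.timestamp):
        if ev.kind != "login_failed":
            continue
        window = recent[ev.ip]
        window.append((ev.timestamp, ev.source))
        # Drop failures that have fallen out of the time window.
        while window and ev.timestamp - window[0][0] > window_seconds:
            window.popleft()
        # Correlation step: count failures and distinct sources together.
        sources = {src for _, src in window}
        if len(window) >= threshold and len(sources) >= min_sources:
            flagged.add(ev.ip)
    return flagged


if __name__ == "__main__":
    # Six rapid failures from one IP, alternating between two logs.
    events = [
        Event(float(t), "vpn" if t % 2 else "web", "203.0.113.7", "login_failed")
        for t in range(6)
    ]
    # A single isolated failure from another IP.
    events.append(Event(100.0, "web", "198.51.100.2", "login_failed"))
    print(correlate_failed_logins(events))
```

Requiring several sources is the correlation step in this sketch: a burst of failures in a single log might just be a user who forgot a password, while the same address failing against both the VPN and the web portal in a short period is more likely to deserve attention.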