Trending →

Investing in Bitcoin: Is Now the Right Time to Enter the Market? Expert Insights & Analysis

Determining whether now is the right time to enter the Bitcoin market requires careful consideration of various factors, including market trends, price dynamics, fundamental analysis, and individual investment objectives. While…

Recent Post →

HealthTech CEO's

Virtual Reality Therapy: Harnessing Immersive Technology to Address Mental Health Challenges, Psychological Disorders, and Pain Management in Healthcare Settings

Virtual Reality (VR) therapy harnesses immersive technology to address mental health challenges, psychological disorders, and pain management in healthcare settings. Here's how VR therapy works…

Interoperability in Healthcare: Overcoming Technical, Organizational, and Regulatory Hurdles to Achieve Seamless Data Exchange, Care Coordination, and Patient-Centric Healthcare Delivery

Interoperability in healthcare is essential for achieving seamless data exchange, care coordination, and patient-centric healthcare…

Healthcare Data Analytics: Leveraging Big Data, Machine Learning, and Predictive Analytics to Drive Insights, Improve Population Health, and Enhance Clinical Decision-Making

Healthcare data analytics harnesses big data, machine learning, and predictive analytics to drive insights, improve…

Securing Healthcare Data: Addressing Cybersecurity Threats, Compliance Requirements, and Data Protection Measures in an Era of Increasing Digitalization

Securing healthcare data is paramount in an era of increasing digitalization to protect patient privacy,…

Augmented Reality in Medical Education: Transforming Learning Environments, Simulation Training, and Surgical Planning with Immersive Visualization Technologies

Augmented Reality (AR) is revolutionizing medical education by transforming learning environments, simulation training, and surgical…

Healthcare Chatbots: Improving Patient Engagement, Access to Information, and Administrative Efficiency through AI-Powered Conversational Interfaces

Healthcare chatbots are AI-powered conversational interfaces that play a crucial role in improving patient engagement,…

5G Connectivity in Healthcare: Accelerating Telemedicine Adoption, Remote Monitoring, and Point-of-Care Diagnostics with Ultra-Fast, Low-Latency Networks

5G connectivity in healthcare is poised to revolutionize telemedicine adoption, remote monitoring, and point-of-care diagnostics…

Bioprinting: Pioneering the Future of Organ Transplantation, Tissue Engineering, and Personalized Medicine through 3D Printing of Biological Constructs

Bioprinting is revolutionizing the fields of organ transplantation, tissue engineering, and personalized medicine by enabling…

Digital Therapeutics: Harnessing Evidence-Based Interventions, Behavioral Modification Techniques, and Remote Monitoring for Chronic Disease Management

Digital therapeutics (DTx) harness evidence-based interventions, behavioral modification techniques, and remote monitoring technologies to manage…

Cybersecurity Challenges in Medical Devices: Ensuring Patient Safety, Regulatory Compliance, and Data Integrity in the Age of Connected Health Technologies

Cybersecurity challenges in medical devices pose significant risks to patient safety, regulatory compliance, and data…

HEALTHCARE

Transformative Influence: Technology’s Dominance in Reshaping the Healthcare Landscape

A new era of telemedicine has been emerged with the advancements of…

Cybersecurity in Healthcare: Protecting Patient Privacy and Medical Devices

Cybersecurity is a critical concern in healthcare, as the industry increasingly relies…

The Future of Healthcare Technology: Revolutionizing Patient Care

The future of healthcare technology holds tremendous potential for revolutionizing patient care.…

Digital Health Apps and Wearables: Empowering Patients for Self-care

Digital health apps and wearables are empowering patients to take control of…

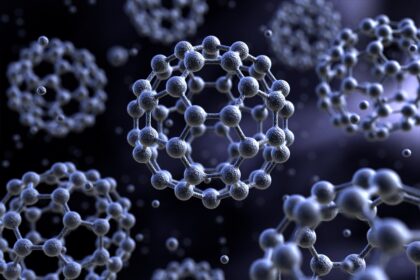

Unveiling the Boundless Potential of Nanotechnology in Revolutionizing the Medical Landscape

The word nano take us to the tiniest shapes but the measurement…

Internet of Medical Things (IoMT): Connected Devices and Healthcare Monitoring

The Internet of Medical Things (IoMT) refers to the network of interconnected…

Medical Tricorder: Pioneering a Paradigm Shift Towards Enhanced Healthcare Efficacy

If you take a survey then five out of every ten folk…

Population Health Management: Leveraging Big Data for Public Health Initiatives

Population health management involves using big data and analytics to understand and…

Enhancing Surgical Efficiency Through Robotics: The Role of Robots in Streamlining Procedures

Surgical robots are not a new name in the medical field. It…

Data Analytics and Predictive Modeling in Healthcare: Improving Patient Outcomes

Data analytics and predictive modeling are playing a significant role in healthcare…

Healthcare and Technology Moving Hand On Hand For The Best Results

Advancements in the medical sector is not hidden from anyone, every single…

Telemedicine and Remote Patient Monitoring: Enhancing Access and Outcomes

Telemedicine and remote patient monitoring (RPM) are innovative healthcare technologies that aim…

Cutting-Edge Technological Solutions Revolutionizing The Healthcare Sector

Healthcare sector is undergoing huge variations in recent days and the potential…

Enhancing Precision and Efficiency in Surgical Practices through Robotics in the Operating Room

Robotics in surgery has emerged as a transformative technology, offering enhanced precision,…

Harness The Power Of Technology In The Medical Sector For Senior Citizens

A common life needs medical assistance in many ways to lead a…

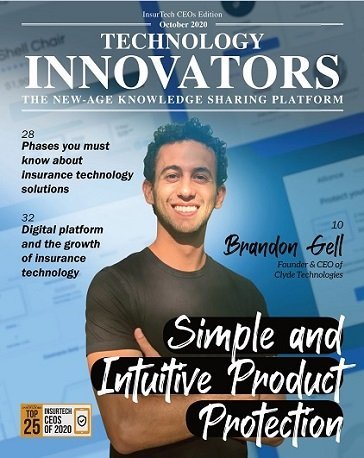

InsurTech CEO's

Elevating Efficiency: Exploring the Role of Robotic Process Automation (RPA) in Streamlining Insurance Operations and Claims Handling

Elevating Efficiency: Exploring the Role of Robotic Process Automation (RPA) in Streamlining Insurance Operations and Claims Handling" delves into how Robotic Process Automation (RPA) is…

Navigating the Regulatory Maze: A Roadmap for Insurance Insiders on Achieving Data Privacy Compliance and Regulatory Alignment

Navigating the Regulatory Maze: A Roadmap for Insurance Insiders on Achieving Data Privacy Compliance and Regulatory Alignment" provides guidance to insurance professionals on navigating the complex landscape…

Reimagining Property Protection: The Integration of IoT Devices and Smart Home Technology in Modern Insurance Solutions

Reimagining Property Protection: The Integration of IoT Devices and Smart Home Technology in Modern Insurance Solutions" explores how insurers are leveraging IoT devices and smart home technology…

Weathering the Storm: Building Climate Resilience Through Advanced Climate Risk Modeling and Insurance Solutions

Weathering the Storm: Building Climate Resilience Through Advanced Climate Risk Modeling and Insurance Solutions" examines the importance of climate resilience and the role of advanced climate risk…

Quantum Leap: Peering into the Future of Insurance Technology with Quantum Computing and Predictive Analytics

Quantum Leap: Peering into the Future of Insurance Technology with Quantum Computing and Predictive Analytics" explores the potential of quantum computing and predictive analytics to revolutionize the…

AI-Powered Fraud Fighters: How Machine Learning Algorithms Are Reducing Insurance Fraud and Improving Claim Accuracy

AI-Powered Fraud Fighters: How Machine Learning Algorithms Are Reducing Insurance Fraud and Improving Claim Accuracy" explores the role of machine learning algorithms in detecting and preventing insurance…

Beyond the Buzzwords: Unraveling the Practical Applications of Insurtech Partnerships and Collaborations for Industry Innovation

Beyond the Buzzwords: Unraveling the Practical Applications of Insurtech Partnerships and Collaborations for Industry Innovation" delves into the tangible applications and real-world impact of insurtech partnerships and…

Next-Generation Claims Management: The Convergence of Insurtech, Digital Transformation, and Customer-Centricity in Claims Services

Next-Generation Claims Management: The Convergence of Insurtech, Digital Transformation, and Customer-Centricity in Claims Services" explores how the integration of Insurtech, digital transformation, and customer-centric approaches is reshaping…

Personalized Protection: Customizing Insurance Products to Address Unique Consumer Needs and Lifestyle Preferences

Personalized Protection: Customizing Insurance Products to Address Unique Consumer Needs and Lifestyle Preferences" explores how insurers are tailoring insurance products to meet the diverse needs and preferences…

Cybersecurity in the Spotlight: Decrypting the Complexities of Insuring Against Cyber Threats in the Digital Age

Cybersecurity in the Spotlight: Decrypting the Complexities of Insuring Against Cyber Threats in the Digital Age" focuses on the evolving landscape of cyber threats and the challenges…

INSURANCE

Telematics and Usage-Based Insurance: Personalizing Auto Coverage with IoT

Telematics and usage-based insurance (UBI) are transforming the auto insurance industry by enabling…

Emerging Trends and Innovations Shaping the Insurance Sector Landscape

Insurance sector was one of those sectors which were untouched from the advancements…

Future Forward: Trends in Insurance Technology Shaping Tomorrow’s Landscape

Insurance was one of the technologies which were totally manual based which was…

Robotic Process Automation in Insurance Technology : Streamlining Administrative Tasks

Robotic Process Automation (RPA) is playing a significant role in insurtech by streamlining…

Insurtech and Customer Experience: Innovations in Policy Management and Claims Handling

Insurtech, which combines insurance and technology, is revolutionizing the insurance industry by introducing…

Climate Change and Insurtech: Adapting Insurance Solutions to Environmental Risks

Climate change has a profound impact on the insurance industry, and insurtech plays…

The Future of Insurance: How Insurtech is Revolutionizing the Industry

Insurtech, the application of technology and innovation in the insurance industry, is revolutionizing…

Smart Home and IoT Devices in Home Insurance Enhancing Safety and Risk Mitigation

Smart home and Internet of Things (IoT) devices are revolutionizing the home insurance…

Parametric Insurance: Leveraging Data and Technology for Rapid Payouts

Parametric insurance is a type of insurance that pays out a predetermined amount…

Microinsurance and Insurtech: Extending Insurance Coverage to the Underinsured

Microinsurance, coupled with insurtech, has the potential to extend insurance coverage to the…

The Symbiotic Relationship Between Digital Platforms & The Burgeoning Landscape Of InsurTech

The world is growing towards digital platforms making the work swift and easier.…

Insurtech Partnerships: Collaboration between Traditional Insurers and Tech Startups

Insurtech partnerships between traditional insurers and tech startups are becoming increasingly common and…

The Role of Big Data Analytics in Insurtech: Unlocking Insights for Risk Assessment

Big data analytics plays a crucial role in insurtech by unlocking valuable insights…

Elevating Insurance Technology: Innovative Solutions Driving Industry Advancement

World is going digitized, and every business sector is restoring its position in…

Retail Tech CEO's

Enhancing Online Shopping Experiences: Leveraging Augmented Reality for Immersive Product Visualization in the CPG Space

Leveraging augmented reality (AR) for immersive product visualization in the consumer packaged goods (CPG) space can significantly enhance the online shopping experience, allowing consumers to…

Optimizing Manufacturing Processes: The Integration of Robotic Automation for Efficiency and Quality Assurance in CPG

In the dynamic landscape of Consumer Packaged Goods (CPG) manufacturing, optimizing processes is crucial for maintaining competitiveness and meeting evolving consumer demands. The integration of robotic…

The Social Commerce Phenomenon: Understanding its Impact and Strategies for Retailers and CPG Brands to Capitalize

The emergence of social commerce, the integration of social media and e-commerce, has significantly transformed the retail landscape. Understanding its impact and devising strategies to capitalize…

Navigating the Digital Revolution: Key Considerations and Best Practices for Retailers Embarking on Transformational Journeys

The digital revolution has fundamentally transformed the retail landscape, reshaping consumer behavior, expectations, and preferences. To thrive in this rapidly evolving environment, retailers must embark on…

Blurring the Lines Between Online and Offline: Strategies for Retailers to Embrace Digital Transformation and Stay Competitive

The retail landscape is undergoing a significant transformation as digital technologies continue to reshape consumer behavior and expectations. To stay competitive in this evolving environment, retailers…

Immersive Retail: Exploring the Role of Augmented Reality Technology in Redefining the In-Store Shopping Experience

In today's digital age, retailers are leveraging augmented reality (AR) technology to transform the in-store shopping experience into an immersive and interactive journey. By blending digital…

Predictive Insights: How Machine Learning Algorithms Are Revolutionizing Demand Forecasting for CPG Companies

In the fast-paced world of Consumer Packaged Goods (CPG), accurate demand forecasting is critical for optimizing inventory levels, minimizing stockouts, and meeting customer expectations. Traditional forecasting…

Digitizing the Supply Chain: Leveraging Technology to Improve Visibility, Efficiency, and Resilience for Retail Operations

The modern retail landscape is characterized by complexity, with supply chains spanning multiple geographies and involving numerous stakeholders. To navigate this complexity and stay competitive, retailers…

Streamlining E-commerce Operations: The Role of Automation in Fulfillment Processes for Retailers

In the dynamic world of e-commerce, efficiency in fulfillment processes is paramount for retailers to meet customer expectations and stay competitive. Automation plays a pivotal role…

Enhancing Online Shopping Experiences: Leveraging Augmented Reality for Immersive Product Visualization in the CPG Space

Leveraging augmented reality (AR) for immersive product visualization in the consumer packaged goods (CPG) space can significantly enhance the online shopping experience, allowing consumers to interact…

Personalized Marketing in the Digital Age: Leveraging Data-Driven Strategies to Engage and Retain Customers in Retail

In today's digital age, consumers expect personalized experiences from the brands they interact with. Personalized marketing, powered by data-driven strategies, has become a cornerstone of successful…

RETAIL

Augmented Reality in Retail: Virtual Try-On and Enhanced In-Store Experiences

Augmented Reality (AR) is transforming the retail industry by offering immersive and interactive experiences for customers. AR technology…

Cashierless Stores and Autonomous Checkout Systems

Cashierless stores and autonomous checkout systems are innovative technologies that are transforming the retail industry. These systems leverage…

Embracing the Digital Frontier: The Transformational Shift from Traditional Retail to Online Shopping

Dated back to the 1980’s and early 1990’s, shopping was a really time consuming but hectic task, when…

Inventory Management and Demand Forecasting with Big Data

Inventory management and demand forecasting are critical aspects of supply chain management, and big data analytics has revolutionized…

Artificial intelligence (AI)-Powered Retail: Revolutionizing the Shopping Experience

AI-powered retail is indeed revolutionizing the shopping experience in various ways, enhancing both online and offline shopping for…

Personalization and Customer Loyalty Programs in the Digital Age

Personalization and customer loyalty programs have become essential strategies for businesses in the digital age. Here's how these…

Balancing the Impact of Advertisements Against the Depth of Narrative Storytelling in Effective Communication Strategies

This highly competitive world totally depends on show off. Audience always wishes to watch something better something creative,…

The Retail Revolution Unfolds: A Comprehensive Exploration of the Profound Evolution Reshaping Consumer Markets

Retail is constantly transforming its face and now with latest four revolutions a great enhancement has been noticed…

Do consumer interaction ways are changing for the retailer, if yes then how?

Since the pandemic hit the world, almost all business sectors have gone through a noticeable change and the…

Artificial Intelligence in Retail: Personalized Customer Engagement and Recommendations

Artificial Intelligence (AI) is revolutionizing the retail industry by enabling personalized customer engagement and recommendations. Here's how AI…

Social Commerce: Influencer Marketing and Shoppable Social Media

Social commerce refers to the merging of social media and e-commerce, where social media platforms are used as…

How Retail Sector Can Hold Their Customers For The Long Term & Reduce Their Expenses?

Since 2020, the world has undergone a lot of changes and a leap in the e-commerce sector. Where…

Revolutionizing Retail: Expert Strategies for Sustainable Practices in the Retail Sector

Retail is one of the widest sectors in the business industry. All those businesses which don’t manufacture or…

Data Analytics and Predictive Modeling in Retail: Understanding Consumer Behavior

Data analytics and predictive modeling are powerful tools in the retail industry that help businesses understand and predict…

FinTech CEO's

Revolutionizing Customer Service in Banking: The Emergence and Advantages of AI-Powered Chatbots

In recent years, the banking sector has witnessed a paradigm shift in customer service with the emergence of AI-powered chatbots. These intelligent virtual assistants are…

Quantum Computing: Exploring its Potential Applications in Financial Modeling and Analysis for the BFSI Sector

Quantum computing has emerged as a disruptive technology with the potential to revolutionize various industries, including the Banking, Financial Services, and Insurance (BFSI) sector. Its…

Robo-Advisors: Transforming Wealth Management Practices through Automated and Data-Driven Solutions

Robo-advisors have emerged as disruptive forces in the wealth management industry, revolutionizing traditional practices through automation and data-driven solutions. These digital platforms utilize algorithms and…

Real-Time Payments: Accelerating Transactions and Driving Financial Inclusion in the Digital Economy

Real-time payments have emerged as a transformative force in the digital economy, offering instant, seamless, and secure transactions that enable individuals and businesses to exchange…

Personalized Financial Services Apps: Enhancing Customer Experience through Data-Driven Insights

In today's digital age, financial services apps are at the forefront of delivering personalized experiences to customers. By harnessing the power of data-driven insights, these…

The Future of Mobile Payments: Trends, Technologies, and their Implications for the BFSI Sector

Mobile payments have emerged as a transformative force in the financial services landscape, offering convenience, speed, and accessibility to consumers worldwide. As technology continues to…

Unlocking Innovation with Open Banking APIs: Fostering Collaboration and Accelerating Development in the BFSI Sector

Open Banking APIs have emerged as powerful tools for fostering collaboration, driving innovation, and accelerating development in the Banking, Financial Services, and Insurance (BFSI) sector.…

Enhancing Security in Financial Services: Leveraging Artificial Intelligence for Fraud Detection and Prevention

In an increasingly digital world, financial services are faced with escalating cybersecurity threats, particularly in the realm of fraud. As traditional methods of fraud detection…

AI-Powered Wealth Management Solutions: Empowering Financial Advisors with Intelligent Insights and Recommendations

Artificial Intelligence (AI) is revolutionizing the wealth management industry by empowering financial advisors with intelligent insights and personalized recommendations. These AI-powered solutions leverage advanced algorithms,…

Navigating Regulatory Challenges: Safeguarding Data Privacy in the BFSI Sector

The Banking, Financial Services, and Insurance (BFSI) sector handle vast amounts of sensitive financial and personal data, making data privacy a paramount concern. Regulatory frameworks…

Insurtech Innovations: How Technology is Transforming the Insurance Landscape

The insurance industry is undergoing a significant transformation driven by technological advancements and innovation. Insurtech, a term used to describe technology-enabled innovations in insurance, is…

FINANCE

Insurtech: Innovations in Insurance Technology and Customer Experience

Insurtech, a term combining "insurance" and "technology," refers to the use of technology and digital innovations to transform the insurance industry. Insurtech companies are…

AI-powered Chatbots and Virtual Assistants in Banking: Enhancing Customer Service

AI-powered chatbots and virtual assistants are transforming the landscape of customer service in the banking industry. They offer several benefits that enhance customer service…

Robo-Advisors and Automated Investing: Shaping the Future of Wealth Management

Robo-advisors and automated investing are indeed shaping the future of wealth management. These technologies leverage artificial intelligence, machine learning, and data analysis to provide…

Contactless Payments and NFC Technology: The Future of Retail Transactions

Contactless payments and Near Field Communication (NFC) technology are reshaping the future of retail transactions. Here's how they are transforming the way we pay:…

Fintech Partnerships: Collaborations between Traditional Institutions and Tech Startups

Fintech partnerships, collaborations between traditional financial institutions and technology startups, have emerged as a powerful force in driving innovation and transforming the financial industry.…

Redefining Finance: The Rise of Fintech and the Evolution of Financial Technology

Fintech may sound very different but in simple language it is financial technology. Along with other business sectors, technology has a great impact on…

Peer-to-Peer Lending and Crowdfunding: Transforming the Future of Financing

Peer-to-peer (P2P) lending and crowdfunding are innovative financing models that have transformed the way individuals and businesses access funding. These models leverage technology to…

Adopting Artificial Intelligence and Machine Learning in BFSI: CIOs’ Dilemma

"Adopting AI and Machine Learning in BFSI: CIOs' Dilemma" is a hypothetical exploration of the challenges and considerations that Chief Information Officers (CIOs) in…

The Future of Financial Inclusion: Fintech’s Impact on Underbanked Populations

Fintech has the potential to significantly impact the financial inclusion of underbanked populations, who have limited or no access to traditional banking services. Fintech…

Open Banking: Transforming the Future of Financial Services

Open banking is indeed transforming the future of financial services. It refers to a system that allows customers to grant third-party financial service providers…

Artificial Intelligence (AI) in Fintech: Revolutionizing Financial Services

Artificial Intelligence (AI) has indeed revolutionized the financial services industry, including the field of fintech (financial technology). AI technologies have brought about significant advancements…

Robo-Advisor and its key benefits in Financial Technology

Financial planning is the backbone for every type of business to operate efficiently to achieve the targeted objective. A single mistake in the financial…

Blockchain CEO's

Beyond Bitcoin: Exploring the Potential of Blockchain Technology in Real-World Applications

Beyond Bitcoin: Exploring the Potential of Blockchain Technology in Real-World Applications" delves into the various practical applications of blockchain technology beyond cryptocurrencies like Bitcoin. Here's…

Tokenomics 101: Demystifying Token Economics and Value Creation in Blockchain Projects

Tokenomics 101: Demystifying Token Economics and Value Creation in Blockchain Projects" provides an introductory overview of token economics, exploring the principles, mechanisms, and factors that contribute to…

The Role of Oracles in Smart Contracts: Ensuring Trustworthy Data Feeds for Decentralized Applications

The Role of Oracles in Smart Contracts: Ensuring Trustworthy Data Feeds for Decentralized Applications" explores the significance of oracles in smart contract ecosystems, focusing on their role…

Web3.0: The Decentralized Web and Its Potential to Reshape the Internet Landscape

Web3.0: The Decentralized Web and Its Potential to Reshape the Internet Landscape" explores the emerging paradigm of Web3.0, characterized by decentralized architectures, blockchain technology, and user-centric principles.…

Privacy Coins: Balancing Privacy and Regulatory Compliance in Cryptocurrency Transactions

Privacy Coins: Balancing Privacy and Regulatory Compliance in Cryptocurrency Transactions" explores the unique challenges and considerations associated with privacy-focused cryptocurrencies, commonly known as privacy coins. Here's an…

Top 5 Altcoins to Watch in 2024: Potential Gems for Your Investment Portfolio

Chainlink (LINK): Chainlink is a decentralized oracle network that aims to connect smart contracts with real-world data. It enables blockchain platforms to securely interact with external data…

Investing in Bitcoin: Is Now the Right Time to Enter the Market? Expert Insights & Analysis

Determining whether now is the right time to enter the Bitcoin market requires careful consideration of various factors, including market trends, price dynamics, fundamental analysis, and individual…

The Emergence of Central Bank Digital Currencies (CBDCs): Implications for the Future of Money

The Emergence of Central Bank Digital Currencies (CBDCs): Implications for the Future of Money" explores the rise of CBDCs and their potential impact on the global financial…

Navigating the Cryptocurrency Market: Strategies for Successful Long-Term Investment

Navigating the Cryptocurrency Market: Strategies for Successful Long-Term Investment" explores various approaches and best practices for investors looking to build a successful long-term investment strategy in the…

Risk Management in Cryptocurrency Investment: Mitigating Volatility and Maximizing Returns

Risk management is essential in cryptocurrency investment due to the high volatility and inherent uncertainties associated with digital assets. Here's a guide on mitigating volatility and maximizing…

Blockchain Gaming: The Convergence of Gaming and Cryptocurrency in Virtual Economies

Blockchain Gaming: The Convergence of Gaming and Cryptocurrency in Virtual Economies" explores the intersection of blockchain technology and gaming, highlighting the emergence of virtual economies and decentralized…

Carbon Neutral Cryptocurrency: Addressing Environmental Concerns in Blockchain Mining

Carbon Neutral Cryptocurrency: Addressing Environmental Concerns in Blockchain Mining" explores the initiatives and technologies aimed at mitigating the environmental impact of blockchain mining activities, particularly in terms…

Cross-Chain Communication: Enabling Seamless Asset Transfers Across Different Blockchains

Cross-Chain Communication: Enabling Seamless Asset Transfers Across Different Blockchains" explores the technology and protocols facilitating interoperability between distinct blockchain networks, allowing for the frictionless transfer of assets…

BLOCKCHAIN

Smart Contracts in Blockchain: Legal and Regulatory Implications

Smart contracts, which are self-executing agreements coded on the blockchain, present various legal and regulatory implications. While smart contracts offer advantages such as automation, efficiency, and transparency, they also raise unique challenges…

Blockchain and Identity Management: Challenges and Opportunities

Blockchain technology has the potential to revolutionize identity management by providing a secure and decentralized platform for managing personal data. Here are some of the key challenges and opportunities for blockchain-based identity…

Blockchain in Supply Chain: Challenges and Solutions for Traceability and Transparency

Blockchain technology has the potential to revolutionize supply chain management by providing enhanced traceability and transparency. However, there are several challenges that need to be addressed to effectively implement blockchain in supply…

Blockchain in Healthcare: Overcoming Data Privacy and Interoperability Challenges

Blockchain technology has the potential to revolutionize the healthcare industry by providing a secure and decentralized platform for managing patient data. However, there are challenges related to data privacy and interoperability that…

Blockchain and Social Impact: Harnessing Distributed Technology for Positive Change

Blockchain technology has the potential to drive social impact by providing new opportunities for transparency, accountability, and empowerment. Here are some ways in which blockchain can be harnessed for positive change: Transparent…

Blockchain and Data Management: Ensuring Integrity and Consistency

Blockchain technology can be used for data management to ensure the integrity and consistency of data. By leveraging blockchain's decentralized and immutable ledger, data can be securely stored, verified, and shared across…

Essential Insights: Preparing for Blockchain Onboarding with Key Considerations

Blockchain is one of the recently emerging technologies opted by a number of folks. But the reach of blockchain connection is still limited due to lack of knowledge about the technology, it…

Blockchain and Decentralized Finance (DeFi): Challenges and Opportunities

Blockchain technology has given rise to decentralized finance (DeFi), which refers to financial applications that operate on a decentralized network without the need for intermediaries such as banks or financial institutions. DeFi…

Interoperability in Blockchain: Bridging the Gap Between Different Distributed Ledger Technologies

Interoperability in Blockchain: Bridging the Gap Between Different Distributed Ledger Technologies" explores the challenges and solutions associated with connecting disparate blockchain networks to enable seamless data exchange and collaboration. Here's an overview…

Blockchain Privacy: Balancing Transparency and Confidentiality

Blockchain technology is often associated with transparency and immutability, which makes it an ideal platform for recording and verifying transactions. However, this transparency can also present challenges when it comes to privacy.…

Governance in Blockchain Networks: Ensuring Consensus and Decision-Making

Governance in blockchain networks refers to the processes and mechanisms through which decisions are made, rules are established, and consensus is reached among network participants. Effective governance is crucial for maintaining the…

Blockchain for Financial Inclusion: Overcoming Barriers in Unbanked Regions

Blockchain technology has the potential to significantly improve financial inclusion by providing secure and low-cost financial services to unbanked populations. However, there are several barriers that need to be overcome to achieve…

Navigating the Blockchain Landscape: Addressing Challenges and Overcoming Hurdles

Blockchain is one of the latest technologies emerging out as a boon for various trading and finance companies. This technology is summarized as a digital ledger of online transactions decentralized to open…

AI CEO's

Artificial Creativity Unleashed: Exploring AI’s Role in Transforming the Creative Industries, From Music Composition to Visual Arts

Artificial Creativity Unleashed: Exploring AI's Role in Transforming the Creative Industries, From Music Composition to Visual Arts" delves into the burgeoning intersection of artificial intelligence…

Artificial Intelligence in Smart Cities: Transforming Urban Living with Intelligent Technologies

Artificial Intelligence (AI) has the potential to transform urban living through the development of smart cities. Here are some ways AI is being used to create intelligent cities: Traffic Management:…

Climate Intelligence: Leveraging AI to Tackle Climate Change Challenges and Foster Sustainability

Climate Intelligence: Leveraging AI to Tackle Climate Change Challenges and Foster Sustainability" explores the intersection of artificial intelligence (AI) and climate change mitigation, highlighting how AI technologies can be utilized…

The Role of CIOs in AI Governance: Strategies for Establishing Ethical AI Practices

As AI becomes increasingly pervasive in organizations, it is important to establish ethical AI practices to ensure that AI systems are developed and used in a responsible and ethical manner.…

AI Bias: Overcoming Discrimination Challenges in AI Systems

AI bias, or algorithmic bias, is a significant challenge for AI systems, and it occurs when AI algorithms or models produce unfair or discriminatory outcomes based on certain biases. AI…

Addressing Bias in Artificial Intelligence : CIOs’ Efforts in Mitigating Algorithmic Discrimination

Addressing bias in AI is critical for ensuring that these systems are accurate, effective, and ethical. CIOs (Chief Information Officers) play a crucial role in mitigating algorithmic discrimination by ensuring…

Navigating the Ethical Challenges of AI: CIOs’ Role in Ensuring Responsible AI Adoption

As artificial intelligence (AI) continues to transform industries and society, there are growing concerns about the ethical implications of its use. Chief Information Officers (CIOs) have an essential role in…

Transformative Education: Harnessing the Full Potential of AI for Personalized Learning, Adaptive Teaching, and Lifelong Skill Development

Transformative Education: Harnessing the Full Potential of AI for Personalized Learning, Adaptive Teaching, and Lifelong Skill Development" explores the intersection of artificial intelligence (AI) and education, highlighting how AI technologies…

Quantum AI: Unlocking the Power of Quantum Computing for AI Applications

Quantum AI is an emerging field that combines the power of quantum computing with artificial intelligence. Quantum computing, which uses quantum bits (qubits) to perform complex calculations, has the potential…

Artificial Intelligence & Creativity: Advancements, Copyright, & Intellectual Property

AI has shown promise in augmenting human creativity in various fields, including art, music, literature, and design. AI-generated content can also raise important questions around copyright and intellectual property. Here…

Artificial Intelligence & Emotional Intelligence: Exploring the Intersection of Technology & Human

Artificial Intelligence (AI) is often thought of as a purely technical and analytical field, but there is increasing interest in exploring the intersection of technology and human emotions, often referred…

AI Talent Acquisition and Retention: CIOs’ Strategies for Building High-Performing AI Teams

Building a high-performing AI team is critical to the success of any organization looking to leverage AI technologies. As a CIO, there are several strategies you can use to attract…

Ethics by Design: Navigating the Complexities of Fairness, Accountability, Transparency, and Explainability (FATE) in AI Systems

Ethics by Design: Navigating the Complexities of Fairness, Accountability, Transparency, and Explainability (FATE) in AI Systems" explores the principles and challenges associated with integrating ethical considerations into the design, development,…

Regulatory Compliance in the Era of AI: CIOs’ Guide to Navigating Legal and Privacy Requirements

As the use of artificial intelligence (AI) becomes more widespread, regulatory compliance is becoming increasingly important. CIOs (Chief Information Officers) play a critical role in ensuring that AI systems are…

ARTIFICIAL INTELLIGENCE

Mindful Machines: How AI is Transforming Mental Health Diagnosis, Therapy, and Support Systems

Mindful Machines: How AI is Transforming Mental Health Diagnosis, Therapy, and Support Systems" explores the intersection of…

Sports Analytics 2.0: The Role of AI in Enhancing Performance Analysis, Talent Identification, and Fan Engagement

Sports Analytics 2.0: The Role of AI in Enhancing Performance Analysis, Talent Identification, and Fan Engagement" delves…

The Evolution of AI Oversight: From Ethics Boards to Regulatory Frameworks, Addressing the Challenges of AI Governance

The Evolution of AI Oversight: From Ethics Boards to Regulatory Frameworks, Addressing the Challenges of AI Governance"…

Inclusive Technology: Empowering Persons with Disabilities Through AI-Driven Assistive Technologies and Accessibility Solutions

Inclusive Technology: Empowering Persons with Disabilities Through AI-Driven Assistive Technologies and Accessibility Solutions" explores the transformative role…

Symbolic AI Resurgence: Bridging the Gap Between Deep Learning and Symbolic Reasoning for Advanced AI Capabilities

Symbolic AI Resurgence: Bridging the Gap Between Deep Learning and Symbolic Reasoning for Advanced AI Capabilities" explores…

Space Intelligence: How AI is Revolutionizing Space Exploration, Satellite Communications, and Astronaut Assistance

Space Intelligence: How AI is Revolutionizing Space Exploration, Satellite Communications, and Astronaut Assistance" explores the innovative ways…

Quantum AI: Charting the Future of Artificial Intelligence with Quantum Computing Advancements and Breakthroughs

Quantum AI: Charting the Future of Artificial Intelligence with Quantum Computing Advancements and Breakthroughs" explores the intersection…

AI Governance in the Digital Era: Crafting Policies and Regulations to Ensure Responsible Development and Deployment of AI Technologies

AI Governance in the Digital Era: Crafting Policies and Regulations to Ensure Responsible Development and Deployment of…

Agriculture 4.0: AI-Driven Precision Farming, Crop Monitoring, and Sustainable Agriculture Practices Redefining the Agri-Tech Landscape

Agriculture 4.0: AI-Driven Precision Farming, Crop Monitoring, and Sustainable Agriculture Practices Redefining the Agri-Tech Landscape" explores the…

Cyber AI: Fortifying Digital Defenses Against Sophisticated Cyber Threats with AI-Powered Security Solutions

Cyber AI: Fortifying Digital Defenses Against Sophisticated Cyber Threats with AI-Powered Security Solutions" explores the critical role…

AI-Powered Policing: Striking the Balance Between Public Safety and Civil Liberties with Predictive Policing Technologies

AI-Powered Policing: Striking the Balance Between Public Safety and Civil Liberties with Predictive Policing Technologies" delves into…

AI-Generated Narratives: Exploring the Impact of AI-Authored Content on Journalism, Storytelling, and Media Production

AI-Generated Narratives: Exploring the Impact of AI-Authored Content on Journalism, Storytelling, and Media Production" delves into the…

Smart Cities Redefined: AI-Driven Urban Planning, Infrastructure Optimization, and Citizen Services in the Smart Cities

Smart Cities Redefined: AI-Driven Urban Planning, Infrastructure Optimization, and Citizen Services" explores the transformative role of artificial…

CEM CEO's

Proactive Customer Service: Anticipating Needs and Resolving Issues Before They Arise

Proactive customer service involves anticipating customer needs and resolving issues before they arise, enhancing satisfaction and loyalty. Here's how businesses can implement proactive customer service…

Customer Experience Metrics and Measurement: Evolving Approaches for Success

Measuring customer experience is essential for understanding how well a business is meeting the needs of its customers and identifying areas for improvement. However, traditional metrics such as customer…

Digital Accessibility: Ensuring Inclusive Customer Experiences for All Users

Digital accessibility involves designing and developing digital products, platforms, and content in a way that ensures equal access and usability for all users, including those with disabilities. Ensuring inclusive…

Gaming and Gamification in Customer Experience: Driving Engagement and Loyalty

Gaming and gamification are increasingly being used by businesses to enhance the customer experience and drive engagement and loyalty. Gamification is the use of game mechanics and design in…

Personalized Recommendations and Customer Experience: Harnessing the Power of AI and Algorithms

Personalized recommendations powered by AI and algorithms have become integral to enhancing the customer experience. By analyzing vast amounts of data, AI algorithms can identify patterns, preferences, and behavior…

Omnichannel Integration: Creating Seamless Customer Journeys Across Platforms

Omnichannel integration involves creating seamless and cohesive customer journeys across multiple channels and touchpoints, including physical stores, websites, mobile apps, social media platforms, and customer service channels. Here's how…

Internet of Things (IoT) and Customer Experience: Creating Connected Experiences

The Internet of Things (IoT) has the potential to transform customer experiences by creating connected experiences. By integrating IoT devices and data, companies can collect real-time information about their…

The Rise of Voice Commerce: Enhancing Customer Engagement and Convenience

The rise of voice commerce, facilitated by virtual assistants and smart speakers, is transforming the way customers interact with brands and make purchases, enhancing engagement and convenience. Here's how…

The Future of Customer Feedback: Innovations in Surveys, Sentiment Analysis, and Voice of the Customer

The future of customer feedback is being shaped by new technologies and innovative approaches to gathering and analyzing customer feedback. Here are some of the trends and innovations that…

Customer Data Platforms (CDPs): Unlocking Insights for Enhanced Customer Understanding

Customer Data Platforms (CDPs) play a crucial role in unlocking insights for enhanced customer understanding by aggregating, consolidating, and analyzing customer data from multiple sources. Here's how CDPs help…

Virtual Reality (VR) Shopping Experiences: Redefining Retail in the Digital Age

Virtual Reality (VR) shopping experiences have the potential to redefine retail in the digital age by providing immersive, interactive, and personalized experiences for customers. Here are some ways VR…

CEM 2.0: Redefining Customer Experience Strategies for the Digital Age

Customer Experience Management (CEM) 2.0 represents a paradigm shift in how businesses approach customer experience strategies, leveraging digital technologies and data-driven insights to create personalized, seamless, and engaging customer…

CUSTOMER EXPERIENCE

Eco-Friendly CX: Aligning Sustainability Efforts with Customer Experience Strategies

Aligning sustainability efforts with customer experience (CX) strategies involves integrating eco-friendly practices and initiatives into the customer journey to enhance environmental responsibility and customer satisfaction.…

Omnichannel Integration: Creating Seamless Customer Journeys Across Platforms

Omnichannel integration involves creating seamless and cohesive customer journeys across multiple channels and touchpoints, including physical stores, websites, mobile apps, social media platforms, and customer…

Conversational AI: Revolutionizing Customer Service and Support Interactions

Conversational AI is revolutionizing customer service and support interactions by providing efficient, personalized, and scalable solutions for businesses. Here's how conversational AI is transforming customer…

Human-Centered Design Thinking in CX: Putting Customers at the Heart of Innovation

Human-centered design thinking in customer experience (CX) involves prioritizing the needs, preferences, and experiences of customers throughout the innovation and design process. By putting customers…

Data-Driven CX Design: Leveraging Analytics to Optimize Customer Journey Mapping

Data-driven customer experience (CX) design involves leveraging analytics and insights to optimize customer journey mapping and enhance the overall customer experience. Here's how businesses can…

Customer Data Platforms (CDPs): Unlocking Insights for Enhanced Customer Understanding

Customer Data Platforms (CDPs) play a crucial role in unlocking insights for enhanced customer understanding by aggregating, consolidating, and analyzing customer data from multiple sources.…

Proactive Customer Service: Anticipating Needs and Resolving Issues Before They Arise

Proactive customer service involves anticipating customer needs and resolving issues before they arise, enhancing satisfaction and loyalty. Here's how businesses can implement proactive customer service…

Community-Centric Customer Engagement: Building Loyalty Through Online Communities

Community-centric customer engagement involves building and nurturing online communities to foster brand loyalty, advocacy, and long-term relationships with customers. Here's how businesses can leverage online…

CX Metrics and Measurement: Evaluating and Improving Customer Experience Performance

Evaluating and improving customer experience (CX) performance requires the use of key metrics and measurements to assess various aspects of the customer journey and identify…

Emotional Intelligence in Customer Experience: Understanding and Responding to Customer Emotions

Emotional intelligence in customer experience involves understanding and responding to customer emotions effectively to build stronger relationships, foster loyalty, and drive satisfaction. Here's how businesses…

The Role of Augmented Reality in Enhancing In-Store and Online Shopping Experiences

Augmented Reality (AR) technology is revolutionizing both in-store and online shopping experiences by providing immersive, interactive, and personalized experiences for customers. Here's how AR enhances…

AutoTech CEO's

Autonomous Driving Revolution: Advancements and Challenges

The autonomous driving revolution represents a paradigm shift in transportation,…

Sustainable Materials in Automotive Manufacturing: Trends and Innovations

Sustainable materials in automotive manufacturing have become increasingly important as the industry seeks to reduce its environmental footprint and meet regulatory requirements. Here are some trends…

Technologies Used In Automobiles

Electronic technology in cars is becoming as crucial as what is under the hood these days. From security and safety to communication and connectivity these strategies…

The Evolution of Mobility as a Service (MaaS): From Concept to Reality

Mobility as a Service (MaaS) has evolved from a conceptual framework to a tangible reality, reshaping the way people access and use transportation services. Here's a…

Predictive Maintenance in Automotive: Preventing Breakdowns and Optimizing Performance

Predictive maintenance in the automotive industry is revolutionizing traditional maintenance practices by leveraging data analytics, machine learning algorithms, and sensor technologies to prevent breakdowns and optimize…

Connected Car Ecosystems: Enhancing Safety and Convenience

The concept of connected car ecosystems revolves around integrating vehicles with the broader digital environment, including infrastructure, other vehicles, and external services. This integration offers a…

5G Connectivity in Cars: Accelerating Data Transfer and Vehicular Communication

5G connectivity in cars represents a significant leap forward in automotive technology, offering faster data transfer speeds, lower latency, and enhanced vehicular communication capabilities. Here's how…

Emissions Reduction Strategies in Automotive: Achieving Environmental Targets

Emissions reduction strategies in the automotive industry are essential for achieving environmental targets and mitigating the impact of transportation on air quality and climate change. Here…

Next-Generation Batteries: Powering the Future of Automotive Electrification

Next-generation batteries are poised to play a pivotal role in powering the future of automotive electrification, offering improvements in energy density, charging speed, lifespan, and safety.…

AI-Driven Vehicle Intelligence: Transforming the Driving Experience

AI-driven vehicle intelligence is revolutionizing the driving experience by leveraging artificial intelligence (AI) algorithms to enhance safety, efficiency, and convenience. Here's how AI-driven vehicle intelligence is…

Innovations In The Automotive Industry

Information-oriented technologies play a key role shortly in the automobile industry, according to a comprehensive analysis of industry trends to expect forward to in 2021. At…

Vehicular Biometrics: Enhancing Security and Personalization in Automotive Systems

Vehicular biometrics involves the integration of biometric authentication and identification technologies into automotive systems to enhance security, safety, and personalization. Here's how vehicular biometrics is enhancing…

AUTOMOTIVE

Cybersecurity in Connected Cars: Protecting Against Vehicle Hacking

As vehicles become more connected and autonomous, ensuring robust cybersecurity measures is crucial to protect against potential vehicle hacking threats. Connected cars rely on various…

The Role of LiDAR and Radar in Autonomous Driving: Sensing the Road Ahead

LiDAR (Light Detection and Ranging) and radar are essential sensor technologies used in autonomous driving systems to perceive the environment and enable safe navigation. They…

Augmented Reality in Automotive: Enhancing Navigation and Driver Assistance

Augmented Reality (AR) technology is making significant strides in the automotive industry, particularly in enhancing navigation systems and driver assistance features. By overlaying virtual information…

Connected Cars: The Intersection of Technology and Mobility

Connected cars represent the convergence of automotive technology and connectivity, revolutionizing the driving experience and transforming mobility. These vehicles are equipped with advanced communication systems…

Autonomous Driving: Advancements in Self-Driving Cars and Safety Features

Autonomous driving, also known as self-driving cars or autonomous vehicles (AVs), is a rapidly evolving technology that has the potential to transform the transportation industry.…

Automotive Technology For Connectivity

Automobile technicians examine, diagnose, and repair mechanical, electronic systems, and electrical as well as components of automobiles. They may specialize in a particular manufacturer's automobiles,…

Tesla also affected due to corona virus

Tesla, one of the biggest and the most renowned name in the technology sector with great innovations transforming the world was latest in talks due…

The Future of Automotive Technology: Innovations Driving the Industry Forward

image Credit: Guardian Safe and Vault The automotive industry is experiencing rapid advancements driven by emerging technologies that are shaping the future of transportation. Here…

5G and Vehicle Connectivity: Enabling High-Speed Communication and Updates

The advent of 5G technology is poised to transform vehicle connectivity by enabling high-speed communication and updates. With its faster data transmission, lower latency, and…

Electric Vehicles (EVs) and the Future of Sustainable Transportation

Electric Vehicles (EVs) play a vital role in the future of sustainable transportation. As the world seeks to reduce greenhouse gas emissions and mitigate the…

Advanced Driver-Assistance Systems (ADAS): A Pathway to Autonomous Vehicles

Advanced Driver-Assistance Systems (ADAS) serve as a crucial stepping stone on the pathway towards fully autonomous vehicles. ADAS technologies integrate sensors, AI algorithms, and connectivity…

Artificial Intelligence in Automotive: Enhancing Driver Assistance and Personalization

Artificial Intelligence (AI) is playing a significant role in the automotive industry, particularly in the development of advanced driver assistance systems (ADAS) and personalized driving…

EduTech CEO's

Virtual Reality in the Classroom: Immersive Experiences and Simulations

Virtual Reality (VR) in the classroom is transforming traditional education by providing immersive experiences and simulations that enhance learning. By leveraging VR technology, students can…

The Future of Education Technology: Transforming Learning Experiences

The future of education technology (EdTech) holds immense potential for transforming learning experiences and shaping the future of education. As technology continues to advance, several key trends and innovations are expected to have a significant…

Open Educational Resources (OER): Accessible and Free Learning Materials

Open Educational Resources (OER) are educational materials that are freely available for anyone to use, modify, and share. These resources include textbooks, lecture notes, course materials, videos, simulations, quizzes, and other learning resources that are…

Technology reshaping the world of education

Technology is transforming education, changing how, when, and where students learn and empower them at every stage of their journey. With the ultimate aim to personalize the learning process, the technology empowers students by giving…

Tech-Enabled Learning: Maximizing Educational Impact Through Technology

Technology is revolutionizing the complete world in a better way. It has transformed education also, by empowering it by giving them the ownership of how they learn and making education relevant to their digital lives.…

Gamification of Education: Engaging and Motivating Students through Games

Gamification of education refers to the integration of game elements and mechanics into the learning process to engage and motivate students. By applying game design principles, educators can make learning more enjoyable, interactive, and immersive.…

Tips for developing an inclusive education system

In today’s era, where everyone is deeming for human rights equality are highly working for the equality of genders, cast, creed, and other races. “What about the equality of differently abled folks?” Don’t they have…

Navigating the Educational Landscape: Addressing Today’s Major Challenges

There is a saying that “education is the only weapon that can change the world”. There are various sectors operating all across the world and each of them are working on various dimensions, but the…

Recent trends of education sector in 2020

From the digitized educational campuses till digital library, today’s teachers are using latest technologies to bring a revolution in education sector and change the latest teaching methodologies. This era is witnessing a new phase where…

EDUCATION

Adaptive Learning Platforms: Customized Instruction and Assessment

Adaptive learning platforms are educational tools that leverage technology and data to provide customized instruction and assessment tailored to individual learners. These platforms use artificial…

Digital Assessment Tools: Streamlining Evaluation and Feedback

Digital assessment tools are revolutionizing the evaluation and feedback processes in education. By leveraging technology, these tools streamline assessment procedures, provide immediate feedback, and offer…

Augmented Reality in Education: Enhancing Visualization and Interactive Learning

Augmented Reality (AR) is a technology that overlays digital information and virtual objects onto the real world, enhancing the learning experience by merging the physical…

EdTech Startups: Innovations Shaping the Future of Education

EdTech (Educational Technology) startups are driving innovation and shaping the future of education by leveraging technology to improve teaching and learning experiences. These startups are…

Cloud Computing in Education: Collaborative Learning and Data Storage

Cloud computing is revolutionizing the field of education by providing scalable, flexible, and accessible solutions for collaborative learning, data storage, and educational resources. Cloud computing…

STEM Education and Robotics: Building Future-ready Skills

STEM (Science, Technology, Engineering, and Mathematics) education plays a crucial role in preparing students for the challenges and opportunities of the future. Integrating robotics into…

Learning Management Systems (LMS): Centralized Platforms for Course Delivery

Learning Management Systems (LMS) are centralized platforms that provide educational institutions with a comprehensive solution for course delivery, management, and administration. LMS platforms offer a…

Internet of Things (IoT) in Education: Smart Classrooms and Campus Management

The Internet of Things (IoT) is revolutionizing education by transforming classrooms and campus management into smart and connected environments. IoT technology in education enables the…

Mobile Learning: Empowering Education on the Go

Mobile learning, also known as m-learning, refers to the use of mobile devices and technologies to facilitate learning anytime and anywhere. With the widespread availability…

MedTech CEO's

Robotics in Surgery: Advancements in Minimally Invasive Procedures

Robotics in surgery has revolutionized the field by enabling advancements in minimally invasive procedures. Minimally invasive surgery (MIS), also known as robotic-assisted surgery or robot-assisted…

Cyber-attacks on supply chain management during vaccine delivery

Cyber-attacks with a specific target- There is more to this issue than meets the eye. When considering the complete supply chain, one must consider transportation businesses, manufacturers, distributors, and R&D…

Artificial Intelligence in Medicine: Revolutionizing Diagnosis and Treatment

Artificial Intelligence (AI) has emerged as a transformative technology in the field of medicine, revolutionizing diagnosis and treatment processes. By leveraging vast amounts of medical data, advanced algorithms, and machine…

Medical sector to witness a great change in the upcoming decade

A study conducted over the medical sector depicts that a dynamic transformation is about to witness. Healthcare technologies are going to revolutionize the medical sector, such as artificial intelligence, big…

To persuade physicians to trust remote health monitoring programs

COVID-19 has accelerated the implementation of telehealth as well as remote patient monitoring around the world. This has given health systems the potential to digitally overhaul their patient care approach.…

Rethinking healthcare services with AI-enabled physicians

While many physicians have been cautious about artificial intelligence in clinical settings in the past, in today's fast-changing healthcare sector, many are considering how AI may enhance the quality of…

The Future of Medical Technology: Transforming Healthcare Delivery

The future of medical technology holds great potential for transforming healthcare delivery and improving patient outcomes. Advancements in various fields, including robotics, artificial intelligence (AI), genomics, telemedicine, and nanotechnology, are…

Bioprinting and Organ Transplantation: Overcoming Organ Shortage

Bioprinting is an innovative technology that has the potential to overcome the shortage of organs available for transplantation by creating functional, patient-specific organs and tissues. Bioprinting combines 3D printing techniques…

Organization and clinical hurdles during vaccination campaigns

The global rollout of vaccines provides promise, but we must equally recognize the enormous hurdles of distributing and administering vaccinations at scale. The logistical difficulties are well chronicled, but cyber…

How might health IT systems be optimized for immunization campaigns?

The COVID-19 immunization program could become a game-changer for healthcare businesses if the correct technology applications are used. COVID-19 vaccination campaigns, on the other hand, are being prepared in partnership…

MEDICAL TECHNOLOGY

Gene Editing and CRISPR Technology: Transforming Genetic Therapies

Gene editing, particularly through the revolutionary CRISPR-Cas9 technology, is transforming the field of genetic therapies by offering precise and efficient tools to modify the DNA…

Telemedicine and Remote Patient Monitoring: Advancements in Virtual Care

Telemedicine and remote patient monitoring have experienced significant advancements in recent years, revolutionizing the delivery of healthcare and expanding access to medical services. These technologies…

Smart Pills and Digital Therapeutics: Innovations in Medication Adherence

Smart pills and digital therapeutics are two innovative approaches that are transforming medication adherence, improving patient outcomes, and enhancing healthcare delivery. Let's take a closer…

Point-of-Care Testing: Portable Devices for Rapid Diagnosis

Point-of-care testing (POCT) refers to diagnostic tests performed at or near the site of patient care, providing rapid results that can aid in immediate clinical…

Medical Drones: Delivering Supplies and Emergency Care in Remote Areas

Medical drones have emerged as a promising technology for delivering medical supplies and emergency care to remote and inaccessible areas. These unmanned aerial vehicles (UAVs)…

Virtual Reality in Medical Training: Simulating Surgical Procedures and Education

Virtual Reality (VR) is playing an increasingly significant role in medical training by providing immersive and realistic simulations of surgical procedures and educational experiences. VR…

Brain-Computer Interfaces: Restoring Functionality and Treating Neurological Disorders

Brain-computer interfaces (BCIs) are innovative technologies that establish a direct communication pathway between the brain and external devices or computer systems. BCIs hold great potential…

Regenerative Medicine: Harnessing Stem Cells for Tissue Repair and Regrowth

Regenerative medicine is an innovative field that aims to restore or replace damaged tissues and organs by harnessing the power of stem cells and other…

Nanotechnology in Healthcare: Targeted Drug Delivery and Disease Detection

Nanotechnology has revolutionized the field of healthcare by enabling precise and targeted drug delivery systems and advanced disease detection methods. By manipulating materials at the…

Medical Imaging Advancements: High-resolution Scans and AI-assisted Diagnostics

Medical imaging has witnessed significant advancements, driven by technological innovations and the integration of artificial intelligence (AI). These advancements have led to high-resolution scans and…

LegalTech CEO's

Artificial Intelligence in Law: Transforming Legal Research and Document Analysis

Artificial intelligence (AI) is transforming the field of law by automating and enhancing various legal processes. In particular, AI is revolutionizing legal research and document…

Technologies that makes legal job easier

Law is dynamical so is the technology. Legal technology expedites the lawyers in different tasks. No machine can do the…

Legal Chatbots and Virtual Assistants: Automating Client Interactions and Support

Legal chatbots and virtual assistants are emerging technologies in the legal industry that automate client interactions and provide support. These…

The Future of Legal Technology: Innovations Reshaping the Legal Industry

The legal industry is undergoing a transformation with the advent of new technologies that are reshaping the way legal professionals…

Boost up law firms with virtual assistants

There are niche disciplines that legal areas are split into that needs expertise. It can be an enterprise or an…

The exigency for legal tech

The world is confronting disruptions that nobody set it up for. Notwithstanding, one thing that has stayed consistent is the…

Blockchain in Legal Contracts: Enhancing Security and Efficiency

Blockchain technology is transforming the way legal contracts are created, executed, and enforced by enhancing security, transparency, and efficiency. Here's…

Data Privacy and Compliance in the Digital Age: Challenges and Solutions

In the digital age, data privacy and compliance have become critical concerns for individuals, organizations, and governments. The widespread collection,…

Cloud Computing in Legal Practice: Collaboration and Data Storage

Cloud computing is revolutionizing the legal practice by enabling seamless collaboration, efficient data storage, and flexible access to information. Here's…

Predictive Analytics in Legal Decision-making: Improving Case Outcomes

Predictive analytics is a technology that utilizes data, statistical algorithms, and machine learning techniques to analyze past and current data…

Smart Contracts: Streamlining Contract Management and Execution

Smart contracts are computer programs that automatically execute predefined actions or conditions based on the terms and conditions written into…

Digital Evidence and E-Discovery: Managing Big Data in Litigation

In the digital age, the volume and complexity of electronic data have increased exponentially, presenting unique challenges in the context…

LEGAL TECHNOLOGY

Legal Research Platforms: Accessing Comprehensive and Relevant Information

Legal research platforms play a crucial role in the legal industry by providing access to comprehensive and relevant information for legal professionals. These platforms leverage…

What is Legal Tech & How it is changing Business Sectors?

Legal sector has a great importance in every business sector but earlier it was a bit hectic and time consuming task. An integration of technology…

Virtual Reality in Courtrooms: Enhancing Visual Presentations and Jury Understanding

Virtual Reality (VR) is being increasingly explored and implemented in courtrooms to enhance visual presentations and improve the understanding of evidence by juries. Here's how…

Legal Document Automation: Streamlining Document Creation and Review

Legal document automation refers to the use of technology to streamline and automate the creation, drafting, and review of legal documents. It involves leveraging software…

The step of legal sector towards technology

There are a number of business sectors operating in the present era and almost among those are rigidly tied with technologies in some or other…

Conveyance deed – A legal document is important; know why?

There are several different property documents or deeds when you are buying or selling properties. The real estate deals are never possible without the presence…

All you need to know about immigration services

Procuring immigration visas vary from country to country, as the legal formalities of immigration services are never the same in each of these countries. There…

Most crucial legal technology development in past few years

Legal sector is one of those sectors which are known to everyone but hardly folks enter in this sector to work with. If you count…

Technology-Assisted Review (TAR) in e-Discovery: Improving Efficiency and Accuracy

Technology-Assisted Review (TAR), also known as predictive coding or machine learning-assisted review, is a powerful tool in the field of e-discovery that utilizes advanced algorithms…

Legal Research and its benefits

Every lawsuit, court appeal, criminal cases, and legal procedures need a certain amount of legal research. Legal research helps in determining the current legal scenarios…

Demystification of legal tech

Lawyers can rationalize their law practices by coalescing tools of legal research and practice management in their law operations. Even a lawyer who still has…

Cybersecurity in the Legal Industry: Protecting Sensitive Client Data

Cybersecurity is a critical concern for the legal industry, as law firms and legal professionals handle vast amounts of sensitive client data. Protecting this data…

GovTech CEO's

The Future of Government Technology: Transforming Public Services

The future of government technology holds tremendous potential for transforming public services and enhancing citizen experiences. As technology continues to advance, governments around the world…

E-Government and Digital Transformation: Improving Access and Service Delivery

E-government and digital transformation initiatives have revolutionized the way governments interact with citizens, businesses, and other stakeholders. By leveraging digital technologies, governments aim to improve access to…

Internet of Things (IoT) for Smart Governance: Enhancing Efficiency and Connectivity

The Internet of Things (IoT) refers to the network of interconnected devices, sensors, and systems that communicate and exchange data over the internet. When applied to smart…

Citizen Engagement and Participatory Governance through Digital Platforms

Citizen engagement and participatory governance are crucial elements of modern democracies. Digital platforms have revolutionized the way governments interact with citizens, enabling greater participation, collaboration, and transparency…

Artificial Intelligence in Public Administration: Streamlining Decision-making and Service Delivery

Artificial Intelligence (AI) is revolutionizing public administration by streamlining decision-making processes and improving service delivery to citizens. AI technologies can automate tasks, analyze vast amounts of data,…

Cloud Computing in Government: Enabling Scalability and Cost Savings

Cloud computing has become an integral part of modern government operations, offering numerous benefits in terms of scalability, cost savings, and efficiency. Here are some key advantages…

Privacy and Data Protection in the Digital Era: Balancing Security and Privacy Rights

In the digital era, privacy and data protection have become critical concerns as individuals and organizations increasingly rely on digital technologies and share personal information online. Balancing…

Blockchain in Government: Improving Transparency and Efficiency

Blockchain technology has the potential to transform the way governments operate by enhancing transparency, improving efficiency, and increasing trust in public services. Blockchain is a decentralized and…

Data Analytics for Evidence-based Policy-making and Decision-making

Data analytics plays a crucial role in evidence-based policy-making and decision-making by providing insights and informing strategies based on empirical evidence rather than intuition or assumptions. Here's…

Emerging Technologies in Public Safety: Enhancing Emergency Response and Disaster Management

Emerging technologies are playing a crucial role in enhancing public safety and improving emergency response and disaster management. These technologies leverage advancements in areas such as artificial…

Cybersecurity in Government: Protecting Critical Infrastructure and Data

Cybersecurity in government plays a critical role in protecting essential infrastructure, sensitive data, and ensuring the continuity of public services. As governments increasingly rely on digital systems…

GOVERNMENT & PUBLIC SECTOR

Open Government Data: Empowering Citizens and Driving Innovation

Open Government Data (OGD) refers to the concept of making government data freely available to the public in a machine-readable format. By opening up government…

Modernization by technology

An efficacious government is a cornerstone for developing the country. Also, upheave in citizens expectation has made the government perform well. The potential to meet…

Smart Cities: Harnessing Technology for Sustainable Urban Development

Smart cities are urban areas that leverage technology and data to enhance the quality of life for residents, improve sustainability, and optimize resource management. These…

Government healthcare programs: A step towards a healthier tomorrow

Healthcare is one of the most crucial sectors used by folks regardless of their bank balance, region, or any other race. The facilities provided by…

Protecting sensitive data while working remotely

The past year showed some tempestuousness for consumers and businesses. This crisis has compelled numerous companies in the world to relocate from office to remote…

Efficient government technologies

We are in the era where a blanket of technologies is being unfolded, that is being used in different ways in daily lives, on which…

Major challenges faced by army

Army constitutes a major part of government sector has also plays a crucial role in saving an entire country by protecting its borders from the…

Technology facilitating low-touch economy

Many businesses and workers are initiating new ways in their jobs to suit the low-touch economy. The industries were forced to rapidly adjust to newly…

Regulatory Sandboxes: Fostering Innovation in Government Regulations

Regulatory sandboxes are frameworks or programs that allow businesses, startups, and innovators to test new products, services, or business models in a controlled and supervised…

Communicating legit information in this pandemic

The COVID-19 (coronavirus) is plausibly the pandemic that has worsen the situation in the whole world. Since its inception to date, there have been various…

Top GovTech Trends Revealed for 2021

The year 2021 has come up with a wide array of changes for the entire world. The government is also moving up front to bring…

Strategies to Improve Public Sector Labour Productivity

Labour productive is an essential element for any organization to perform well and achieve intended goal. To keep your organizational working procedure effective and accurate,…

Digital Identity and Authentication: Enhancing Security in Government Services

Digital identity and authentication play a crucial role in enhancing security and trust in government services. As governments increasingly transition towards digital platforms and online…

Telemedicine CEO's

Factors driving telemedicine towards growth

A number of aspects of real technological world are changing our day to day life and medical sector is undergoing through a tremendous change making…

The Role of Wearable Devices in Telemedicine: Monitoring Health from Anywhere

Wearable devices play a significant role in telemedicine by enabling the remote monitoring of patients' health from anywhere. These devices, typically worn on the body or incorporated into clothing, collect…

Telemedicine Integration in Electronic Health Records (EHR): Streamlining Care Coordination

The integration of telemedicine with Electronic Health Records (EHR) systems has proven to be valuable in streamlining care coordination and improving the efficiency of healthcare delivery. Here's how the integration…

Telemedicine and Disaster Response: Providing Medical Care in Crisis Situations

Telemedicine plays a critical role in disaster response by providing remote medical care and support in crisis situations. Here's how telemedicine enhances disaster response efforts: Remote Medical Triage: Telemedicine enables…

The Future of Telemedicine Regulation and Reimbursement: Policy Considerations

The future of telemedicine regulation and reimbursement is a crucial area of focus as telemedicine continues to evolve and expand. Several policy considerations are important for ensuring the effective implementation…

Teleophthalmology: Remote Eye Care and Vision Services

Teleophthalmology is a branch of telemedicine that focuses on providing remote eye care and vision services. It utilizes telecommunication technology to facilitate virtual consultations, diagnosis, and management of eye conditions.…

The Future of Telemedicine: Transforming Healthcare Delivery

The future of telemedicine holds great potential to transform healthcare delivery and improve access to medical services. Telemedicine, also known as telehealth, refers to the use of technology to provide…

Telemedicine and Home Health Monitoring: Enabling Aging in Place